Can a piece of writing suffer from too much feedback?

Writing, it has often been said, is a conversation, so critiques are essential to the process. This is both the lore and the law of writing. But whose notes should we take note of?

Ebook reader retailers collect precise reading data – down to specific pages read and assorted usage statistics. As ebook sales continue to increase, such intricate data is going to play an increasingly vital role for publishers. (For a chart on privacy and ereaders see the EFF’s guide; it’s a little old but still worthwhile.)

But who does this data benefit?

I read almost all my ebooks through Amazon. Recently my purchases have been nonfiction books. Every few page turns (clicks), I’m greeted by underlined passages with statements such as: 9 highlighters! At first it reminded me of the usefully annotated library books I’ve borrowed – until I reflected on the horror of all that shared reading data. It is the literary equivalent of showing Kmart your underwear drawer.

Right now there are countless writing courses and writing groups providing feedback on written pieces. It is, for most people, an organic process. Many writers start with a word processor and let it correct the odd spelling mistake or grammar error, but from that point on crafting advice is taken from other people. Once finished with the workshopping group/class, the piece will find its way to a reader and, possibly, an editor. And that isn’t even the final round. The bound-up publication will end with a reading public, and the reviews will let you know the rest.

That’s the long lifecycle of the printed word: plenty of feedback, but plenty of time to digest it too.

But if you publish digitally your turnaround time is a lot quicker. Bloggers and others who share their writing online often use analytics systems, like Google Analytics, to view the number of people who read their posts, how long they spend on that post, where they came from and where they went after they read that particular post. It’s easy to see how they read your website and, based on how long they spend there, whether they found your writing of interest or not. Comments give the blogger concrete feedback, clarifying or arguing points.

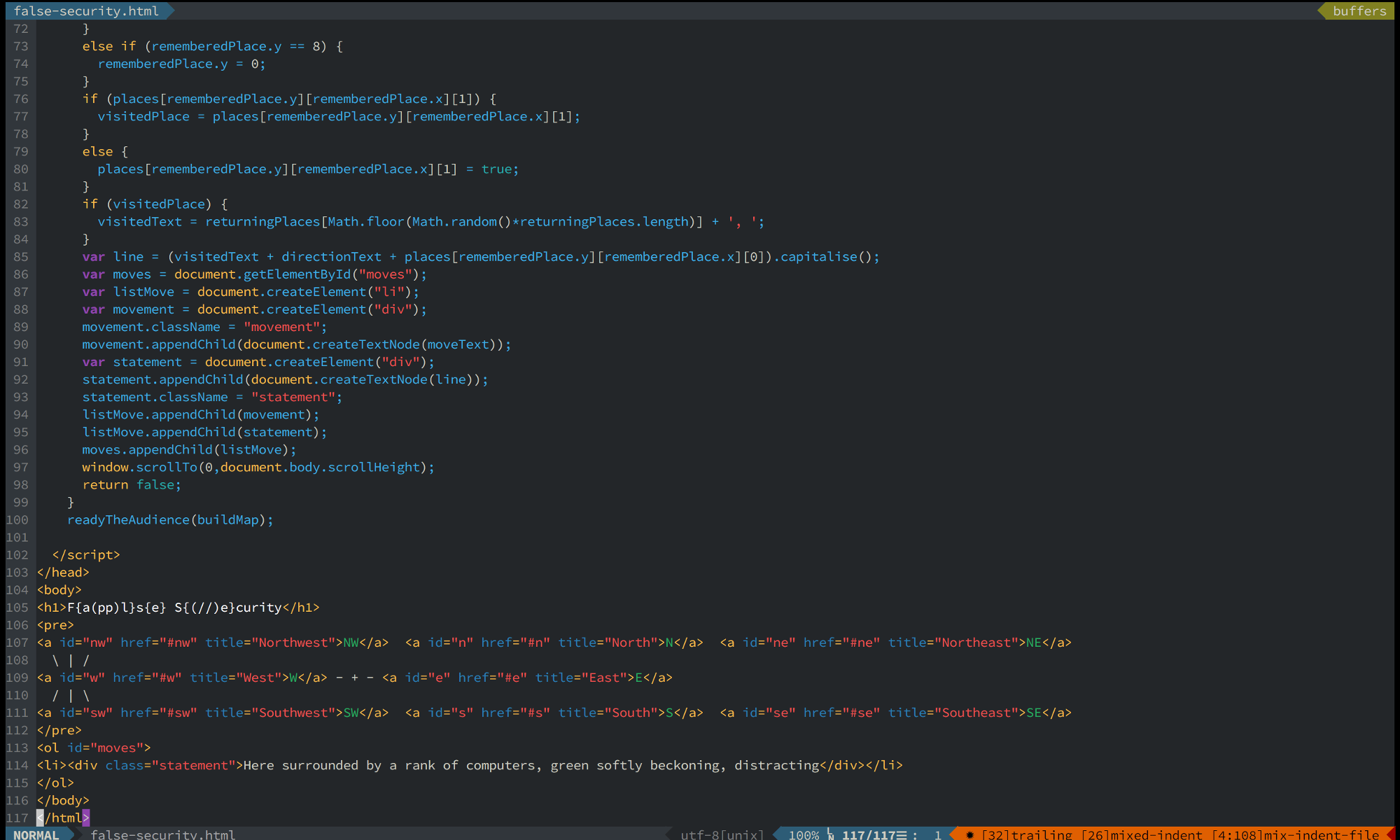

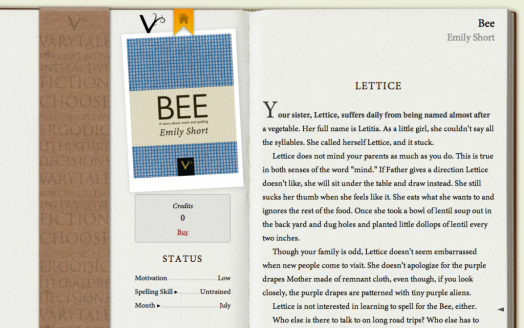

Online workshopping platforms also allow writers to share and discuss their writing and receive critiques. Other systems allow people to vote directly on your writing. Varytale, an interactive book platform that lets readers both vote and comment on individual scenes, does exactly this, claiming that:

Our tools show you exactly what readers are reading and enjoying about your book. You can start with a short story, and see how successful it is within a matter of days, adding and expanding content as readers demand it.

Is this useful for the writer?

In a recent blog post on using Varytale to write the interactive story Bee, Emily Short described the experience of receiving feedback on each story fragment. Short noted that readers voted the culmination scenes higher than the build-up, remarking, ‘the build-up isn’t as inherently punchy and memorable, but that’s because it has a different job to do! That doesn’t mean it doesn’t belong in the story’.

While web-based analytics are still considered a novelty for writers, Australian publishers have access to book sales statistics courtesy of Nielsen BookScan. In their dynamic banner, Nielsen state that they provide ‘online actionable sales information’. (Presumably so that publishers can ‘take action’.) Most books purchased from booksellers go into their database and, from that, BookScan compiles the bestseller lists and various other data collections. But this is where the data ends; BookScan cannot report on what the purchaser thinks of, or does with, the book once they’ve left the store.

And therein lies the problem.

In publishing there exists a tendency to confuse book sales with book worth, and any response with a quality response. And let’s face it, sales data may not be the healthiest feedback for authors to obsess over. When Amazon, through Author Central, released the Nielsen BookScan print sales to authors, some joked that this would provide yet another site for authors to constantly click refresh on. Although Emily Short wrote that in the end she found the feedback beneficial, I think there will always be a difference between what authors, readers and publishers find useful in data analytics. Of course there may be overlap, but an author could, for instance, be writing for a very small audience, while a publisher wants the book to sell as widely as possible.

So what might a publisher do with chapter-by-chapter and page-by-page purchasing and reading statistics? Like the ominous ‘actionable sales information’, such data implies that some action will be taken – perhaps less literary fiction and more cookbooks? More celebrity biographies? What will readers do? Perhaps they’ll only read the chapters concerning the scandals and not the boring build-up? And what effects will all of this data have on authors? If they are literally shown the ‘boring bits’ of their works, the sections where readers stopped reading, or the chapters which were skipped (I actually know someone who skips chapters), might they not try to include more action and less build-up in their next work?

Maybe, each year in time for Christmas, we’ll see anthologies such as The Year’s Highest-Rated Chapters or, even worse, The Year’s Highest-Rated Pages. Published by Amazon, of course.

This post was originally published on the Overland literary journal website as part of the Meanland project: The literary equivalent of your underwear drawer.